Recently, Christopher Exley (Keele University) published a study in the journal “Scientific Reports” on the aluminum content of brains from “control” individuals, and compared it to brain samples from patients who suffered from Alzheimer’s disease (both familial and sporadic forms), autism spectrum disorders and multiple sclerosis. With this paper, I assume Exley is trying to defuse a major criticism that has plagued his papers: the lack of valid controls (including in his 2017 study on ASD brains [1], which was recently criticized here). Can this latest study silence Exley’s critics and vindicate his claims? Let’s figure this out.

1. About the study:

Unlike most of his other studies, this one is published in Nature’s “Scientific Reports”, one of Nature Publishing Group’s open-access journals. Unlike the traditional academic publishing scheme, the “open-access” publishing scheme shifts the burden of cost (aka “the paywall”) from the reader to the author. In exchange, readers can freely access the content of a study.

Although “open access” can improve the outreach of science, it is also a double-edged sword, as the financial incentive of such publications may clash with the peer-review process if you are an editor of such a journal. It may be very tempting to accept a study that does not follow a rigorous experimental design and displays flawed results, as the article processing fees (which can reach up to $3000 per study) can represent a non-negligible source of revenue.

Scientific Reports’ main scope is to publish any study that is scientifically and technically sound, regardless of its novelty or innovative aspect (a common decision factor in high-impact-factor journals such as Science and Nature). However, another outcome I consider reflective of journal integrity is the number of retractions. Surely, it is not an absolute and objective measure, but it can be indicative of the relative health of a journal. And it is not looking that good for this journal.

In the last two years, the journal retracted over 30 papers, which is quite alarming for a journal that is nine years old. The vast majority of its retractions occurred in these last two years, suggesting a change either in the peer-review quality (which I doubt would happen unless reviewers suddenly decided to lower their review criteria), or in the editorial decision process, which may override reviewers’ decisions by accepting a manuscript despite reviewers’ recommendations to “reject” or “revise” it.

Going back to this study, the author list is pretty succinct: we have Christopher Exley (Keele University) as first author and Elizabeth Clarkson (Wichita State University). She is not a biologist, but likely contributed here as a statistician (see her profile here: http://bethclarkson.com). At first sight, I would consider the presence of a statistician on a paper a welcome move to ensure a proper, statistically sound experimental design with enough statistical power to make sense of the data. However, the large number of discrepancies noted in the manuscript was enough to trigger concerns in me, armed only with the undergraduate bio-statistics classes I took during my junior and senior years. We will discuss this in more detail later in this article.

2. The introduction:

The introduction is, to say the least, confusing and unclear, with a touch of arrogance that is typical of Exley. Here, there is no “funnel” approach (introducing the topic in general, then converging toward the main goal of the study). What is even more concerning is the author’s over-reliance on self-citations. Out of 13 citations in the introduction, 9 were from studies authored by Exley. An introduction should provide a rapid, summarized overview of the existing literature and cite studies that either support or contradict the main hypothesis that the study will test. By ignoring (or minimizing) the existing literature, the author is indicating to us that he is likely approaching his hypothesis with a confirmation bias, which can be indicative of a risk of cherry-picking the data or, worse, fabricating data to fit a preconceived conclusion.

It is also important to note the following information: “Brain banks have themselves struggled with the concept of what constitutes a true control14. We asked one such brain bank to identify a set of donor brain tissues that could act as a control for brains affected and diagnosed with a neurodegenerative disease. The majority of control brains available through brain banks are from older donors and so most still show some signs of age-related degeneration. Herein we have measured the aluminium content of twenty control brains where in each case there was no overt neurodegeneration, no diagnosis of a neurodegenerative disease but some age-related changes in the older donors. We have then compared these data with data, measured under identical conditions, for donors having died with diagnoses of Alzheimer’s disease, multiple sclerosis and autism.”

It is important to note that this is not Exley’s first study looking at aluminum levels in brain samples from “healthy donors”: he published a study in 2012 in the journal Metallomics [2]. What would be considered a good control? That’s a good question. I would assume any donated sample from a patient who died from a non-neurological disease, with a patient history excluding any confounding factor such as chronic kidney disease (since over 95% of aluminum is eliminated via the renal route) and/or prolonged feeding using IV bags (total parenteral nutrition). Since we assume the brain-to-blood clearance of aluminum to be a very slow process, we would assume age is a determining factor (the older a donor is, the more aluminum has accumulated). Here, we will compare this study to his 2012 Metallomics study [2] for an important reason: the reproducibility of his findings. This is an important criterion in the scientific method: anyone should be able to reproduce your findings using the same protocol, same technique and same type of sampling population. If, as here, the same lab is unable to reproduce its own findings using the same method, there is a problem.

3. The methodology:

From the methods section: “Brain tissues were obtained from the London Neurodegenerative Diseases Brain Bank following ethical approval (NRES Approval No. 08/MRE09/38+5). Donor brains were chosen on our behalf by the consultant neuropathologist at the brain bank. All had a clinical diagnosis of ‘control’ while some had a pathological diagnosis that included age-related changes in tissue. There were five male and fifteen female donors. They were aged between 47 and 105 years old. Tissues were obtained from frontal, occipital, parietal and temporal lobes and cerebellum from all donors.” The samples in the original Metallomics study [2] were from the MRC CFAS study, and unfortunately, I could not pinpoint the exact location of that brain bank. However, we can assume that these two brain banks are likely distinct. That said, I would not expect major differences between brains collected using the same inclusion criteria and from the same country (both sets are from donors who resided in the UK). Importantly, in addition to the different cortical regions (temporal, frontal, parietal, occipital), Exley added samples from the cerebellum to this study. To allow a direct comparison, we will ignore this extra sampling site in our review.

The technique used for the study is the same: about 1 gram of tissue was minced, chemically digested and analyzed using transversely heated graphite furnace atomic absorption spectrometry (TH GFAAS). GFAAS is an analytical technique used to measure aluminum in biological samples, with variable sensitivity limits (see the table on the ATSDR website: https://www.atsdr.cdc.gov/ToxProfiles/tp22-c7.pdf).

4. The results:

As expected, we have the same issues that swamped his previous studies: the extreme variability of aluminum content between two technical replicates from the same sample, and the way Exley handles technical replicates.

When you perform an experiment, you are always at risk of seeing differences between the values obtained from one measurement to the next. This can be due to various factors: heterogeneity within the sample, errors during sample processing and analysis, or differences in aluminum content between individuals. However, you can correct for such differences within the same individual by using technical replicates. By sampling several sites of the same brain tissue sample, you can increase the accuracy of your measurement by taking an average value. The higher the number of technical replicates, the more accurate your estimate. However, this can be compromised if your technical replicates are inconsistent. That’s the issue haunting his graphite furnace assay, which ends up with huge variation between samples.

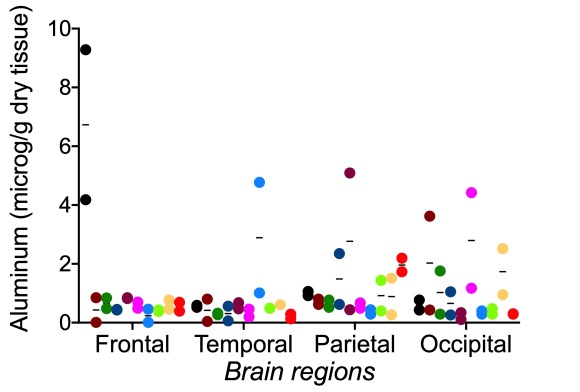

Let’s plot the first 10 patients’ brains and see what the technical replicates look like.

The further apart the dots are (each dot is a technical replicate, and each color corresponds to one donor sample), the higher the variability within a biological replicate, with the horizontal bar representing the average. As you can see, we have several instances of huge variability within an individual. Which amount is correct? The lower measurement, or the higher measurement? We can answer that by increasing the number of technical replicates, but you may be limited by the amount of biological material (in this case brain tissue) you have access to. There is also substantial variability between individuals, as some individuals show very high levels of aluminum compared to others in a given brain region. You cannot exclude these individuals unless you have a very good reason to (and normally that would happen prior to the data analysis, by excluding tissue samples from patients meeting one of your exclusion criteria). However, excluding them, even for a reason that seems valid, creates a risk of cherry-picking your data and biasing your statistical analysis. One thing must be clear: you can only use biological replicates (aka individuals) in your statistical analysis. You cannot use technical replicates from the same individual and treat them as separate biological replicates. This is where the data become murky when it comes to Table 4. The N numbers cited by Exley for the sporadic form of Alzheimer’s disease (sAD) are N=1394 and N=1322, with P-values obtained using the Wilcoxon method. Where do these numbers come from? I don’t know, and Exley fails to clarify this important information.
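To make the distinction between technical and biological replicates concrete, here is a minimal Python sketch. The donor names and readings are hypothetical values invented for illustration; they are not taken from the paper. It collapses each donor's technical replicates into a single mean and contrasts the number of donors with the inflated count you get when every reading is treated as a separate subject.

```python
from statistics import mean

# Hypothetical aluminium readings (µg/g dry weight), NOT taken from the paper.
# Each donor (biological replicate) has several technical replicates.
readings = {
    "donor_A": [0.40, 2.10, 0.55],
    "donor_B": [0.30, 0.35],
    "donor_C": [1.20, 0.25, 0.30, 0.90],
}


def donor_means(data):
    """Collapse technical replicates into one mean value per donor."""
    return {donor: mean(values) for donor, values in data.items()}


per_donor = donor_means(readings)

# The N that belongs in a statistical test is the number of donors.
n_biological = len(per_donor)
# Counting every reading as its own subject inflates N (pseudoreplication).
n_inflated = sum(len(values) for values in readings.values())

print(f"N (donors) = {n_biological}, N (all readings) = {n_inflated}")
for donor, value in per_donor.items():
    print(f"{donor}: mean = {value:.2f}")
```

In this toy example the test should be run on 3 values (one per donor), not on 9 readings. An N in the thousands for a single disease group is hard to reconcile with a count of donors.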

Unless Exley clarifies and provides the aluminum concentrations behind each of these N numbers individually (and makes it clear which ones are technical replicates from each patient), I will not trust any statistical analysis.

The second issue I have with the results is how they compare to his 2012 Metallomics paper. Remember, reproducibility is key for a finding to be scientifically credible. The inability of another scientist to reproduce your findings using the same methodology as yours (or worse, your inability to reproduce your own data) is a serious red flag that often ends in a retraction. This is the very same issue that cost Judy Mikovits her Science study, as nobody, including herself, was able to reproduce the detection of XMRV in blood samples from patients suffering from chronic fatigue syndrome.

Therefore, using the average concentrations per individual from his 2012 study, I plotted them against his 2020 study (excluding the cerebellum samples from the analysis, as such samples were not present in his 2012 study). Here are the resulting plots and statistics.

This is concerning.

Out of four brain regions, three showed a significantly lower amount of aluminum in the 2020 samples compared to the 2012 samples (unpaired t-test with Welch’s correction, which does not assume equal variances between groups). This tells us that not only can Exley not reproduce his own findings from a decade ago, but he may also be consistently underestimating such values now, considering that the statistical power of his 2012 paper surpasses this one by its sample size (the 2012 study has 60 individuals, the 2020 study only 20. Remember, in statistics, the higher the N, the better).
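For readers who want to see what the Welch correction does, here is a minimal sketch in plain Python. It is not the Prism implementation, and the two lists of per-donor means are hypothetical numbers invented for illustration. It computes Welch's t statistic and the Welch-Satterthwaite degrees of freedom for two groups with unequal sizes and variances.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and the Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error of each group mean (sample variance / n).
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    t = (mean(sample_a) - mean(sample_b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical per-donor means (µg/g dry weight), NOT the published values.
controls_2012 = [1.2, 0.8, 2.5, 1.9, 0.7, 3.1, 1.4, 1.0]
controls_2020 = [0.4, 0.6, 0.3, 0.9, 0.5]

t_stat, dof = welch_t(controls_2012, controls_2020)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

The p-value then comes from a t distribution with `dof` degrees of freedom. If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` runs the same test and returns the p-value directly.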

How does Exley explain that difference? I would assume age has little to do with it, the inclusion criteria for donors should be similar, the experimenter must be the same (Exley authored both papers), and the technique used for analysis is the same (TH GFAAS). This leaves us with no explanation. Nowhere in the discussion does the author address this discrepancy, which matters because it changes the whole interpretation of the data.

The third issue comes with the “pathological samples”. Exley is pretty frugal in telling us where these samples come from, and even less willing to share detailed information about them (patients’ individual information such as age and sex, and aluminum content of the technical replicates in each brain region). Exley mentions that data are freely available upon request by email, but I am surprised he did not provide such tables as supplementary materials visible to the public. Is Exley on a fishing expedition to hunt down his detractors? I don’t know, but asking him to disclose such data requires his detractors to fully disclose their identity.

However, I have prepared a table that summarizes his data and the data I was able to collect from his previous publications [1, 2, 4]. I used individual average brain concentrations and ran the statistical analysis using the built-in functions of Prism 8.0 (GraphPad Software, La Jolla, CA).

Comparing the average values reported in this paper with his previous publications, I would assume the values reported for FAD and ASD come from his previous work [1, 2], as they match the average brain concentrations from patients calculated from his tables. My question is: why does he provide us only with summary statistics (mean, median) and not the raw numbers? I would expect Exley to take a transparent approach by displaying all the raw data for the AD, ASD and MS samples in table form, as he did for the control donors, to allow everyone to perform a statistical analysis and verify his claims.

Yet, he decided not to (but will provide such data upon request).

You can also see that if you pick one set of controls over another (let’s say the controls from 2020 versus the ones from 2012), you can reach a very different conclusion: cherry-pick the right controls and you can tell a very different story. Common sense suggests that we should merge both sets of controls into a single group to increase statistical power, and re-run the statistics of the diseased groups against that control group.

What if we merge both the 2012 and 2020 controls into one group? Well, it gives an unfavorable outcome to Exley, as we can see in this graph.
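The effect of control selection can be illustrated with a small Python sketch. All numbers here are hypothetical and invented for illustration; they are not from either paper. The same "disease" group looks lower than, higher than, or close to the controls depending on which control set is used.

```python
from statistics import mean

# Hypothetical per-donor mean aluminium content (µg/g dry weight).
# These numbers are invented for illustration and are NOT from either paper.
controls_2012 = [1.8, 2.4, 1.1, 3.0, 2.2, 1.5]
controls_2020 = [0.5, 0.9, 0.4, 0.7]
disease_group = [1.6, 1.2, 2.0, 1.4]


def mean_difference(group, control):
    """Difference between the group mean and the control mean."""
    return mean(group) - mean(control)


# Merging both control sets gives a single, larger reference group.
pooled_controls = controls_2012 + controls_2020

comparisons = [
    ("2012 controls", controls_2012),
    ("2020 controls", controls_2020),
    ("pooled controls", pooled_controls),
]
for label, control in comparisons:
    diff = mean_difference(disease_group, control)
    print(f"disease vs {label} (n={len(control)}): difference = {diff:+.2f}")
```

With these made-up values the sign of the difference flips between the two control sets, and the pooled group sits in between. This is why the choice of controls should be fixed before looking at the results.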

The fourth and final issue I have is with the statistics, even more so when you have a statistician onboard. Exley used the analysis of variance (ANOVA) for the statistical analysis. To be able to perform an ANOVA, you need to fulfill three requirements: independence (in other words, each observation comes from a separate, unrelated individual), homoscedasticity (the standard deviations of the groups are equal) and normality (the samples follow a normal, or Gaussian, distribution). Ideally, you also want similar N numbers between the groups.
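As a rough illustration of how two of these assumptions can be screened before running an ANOVA, here is a minimal Python sketch using hypothetical per-donor values (not data from the paper). It computes the skewness of each group and the ratio between the largest and smallest group standard deviations. These are informal descriptive checks, not the formal tests (such as Shapiro-Wilk or Levene) that a statistician would typically run.

```python
from statistics import mean, pstdev, stdev


def skewness(values):
    """Population skewness (third standardized moment); 0 for symmetric data."""
    m = mean(values)
    s = pstdev(values)
    return sum((x - m) ** 3 for x in values) / (len(values) * s ** 3)


def sd_ratio(groups):
    """Largest group standard deviation divided by the smallest."""
    sds = [stdev(g) for g in groups]
    return max(sds) / min(sds)


# Hypothetical per-donor values (µg/g dry weight), NOT from the paper.
groups = {
    "control": [0.4, 0.5, 0.6, 0.5, 0.7, 0.4],
    "disease": [0.6, 0.8, 0.7, 1.0, 6.5, 0.9],
}

for name, values in groups.items():
    print(f"{name}: skewness = {skewness(values):.2f}")
print(f"SD ratio (largest / smallest) = {sd_ratio(list(groups.values())):.1f}")
```

A strongly positive skewness (one or two very high donors pulling the tail) and a large gap between group standard deviations both suggest that a classical ANOVA is a poor fit, and that a rank-based alternative such as the Kruskal-Wallis test may be more defensible.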

Here is what Exley is telling us: “The distribution of aluminium content data is heavily skewed in the treatment groups. Data are not balanced with the number of observations and their respective lobe varying considerably between treatment groups. There is large variability in repeated measurements taken from the same donor. Analyses were performed for both the unweighted observations and means across all lobes for each individual. The assumption that the data across all groups are normally distributed, an assumption that underlies any ANOVA model, is questionable at best.”

Wait a minute, is Exley telling us that the data quality is so poor that we would be fools to expect an accurate statistical analysis from the tools he chose to use? To quote Mark Twain: “There are three kinds of lies: lies, damned lies and statistics”. If there is one thing to learn about bio-statistics, it is to be careful about its use and its meaning in context. The search for a positive statistical outcome is not new and is a common bias that can plague studies, commonly referred to by John Ioannidis as “P-hacking”. Surely, you will find an obscure or rarely used statistical method that will tell you there is significance when there is none.

https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.1002106

To be honest, I am not sure you can make sense of the statistics because of the high variability. Sure, you can find an obscure statistical test that will tell you that you have statistical significance. But that has a name. It is called “p-hacking” and it is bad.
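One way to see why shopping around for a significant test is a problem: under the simplifying assumption that each attempt is an independent test on data with no real effect, the chance of getting at least one p < 0.05 grows quickly with the number of attempts. The short Python sketch below computes that probability. The function name is hypothetical, and in practice different tests run on the same data are correlated, so this is an illustration rather than an exact figure.

```python
def chance_of_false_positive(n_tests, alpha=0.05):
    """Probability that at least one of n independent null tests gives p < alpha."""
    return 1 - (1 - alpha) ** n_tests


for k in (1, 3, 5, 10):
    print(f"{k:>2} tests: {chance_of_false_positive(k):.0%}")
```

With a single test the risk is the nominal 5%, but with ten independent attempts it is already around 40%.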

I am personally concerned that Elizabeth Clarkson gave her seal of approval to that.

As mentioned in the acknowledgments section: “E.C. carried out all statistical analyses. C.E. and E.C. wrote and approved the manuscript.”

I would have been more comfortable had a bio-statistician consulting for the journal reviewed the statistical analysis, because I am not at all convinced by the data.

Final point, on a more sarcastic tone: the last thing I would ever consider in my manuscript is self-referencing infomercials written in non-scientific outlets, especially on a website with questionable credentials. Yet Exley concludes the discussion with this sentence:

“We may then live healthily in the aluminium age (https://www.hippocraticpost.com/mens-health/the-aluminium-age/).”

5. Citations:

[1] Mirza, A., et al., Aluminium in brain tissue in familial Alzheimer’s disease. J Trace Elem Med Biol, 2017. 40: p. 30-36.

[2] House, E., et al., Aluminium, iron and copper in human brain tissues donated to the Medical Research Council’s Cognitive Function and Ageing Study. Metallomics, 2012. 4(1): p. 56-65.

[3] Chen, M.B., et al., Brain Endothelial Cells Are Exquisite Sensors of Age-Related Circulatory Cues. Cell Rep, 2020. 30(13): p. 4418-4432 e4.

[4] Mold, M., et al., Aluminium in brain tissue in autism. J Trace Elem Med Biol, 2018. 46: p. 76-82.

4 replies on “[Neurosciences/Junk Science] “Aluminium in human brain tissue from donors without neurodegenerative disease: A comparison with Alzheimer’s disease, multiple sclerosis and autism”: Another Exley study, another evidence of questionable quality study.”

Christopher Exley is an anti-vaxxer. He is undoubtedly trying to show that aluminum in vaccines is responsible for ASD, Alzheimer’s, or whatever the diagnosis of the day happens to be.

None of these problems should be surprising.

You see, that’s the problem with Exley. For some reason, he is fixated on the idea that aluminum is behind every neurological disease under the sun, and this likely stems from early studies on Alzheimer’s and aluminum. But as science moved on from that hypothesis (as studies could not find evidence supporting it), Exley seems to be stuck in an infinite loop, trying to prove to us that the Loch Ness monster (or Sasquatch) exists. And as with cryptozoologists, the evidence put on the table is weak and unconvincing, to say the least.

That’s a problem that is consistent across many of his studies: they lack proper controls, rely on a single technique, present a high degree of variability even when performed by the same lab, and even contradict other work (if you put his work side by side with Walter Lukiw’s work, which is honestly not better in terms of scientific rigor, they contradict each other’s claims).

If Exley wants to emulate Semmelweis (who stood up for antiseptic procedures well before germ theory and Koch’s postulates), he will need to produce papers that exceed the existing literature in quality. Look at his papers, then look at recent studies from Karen Weisser’s lab: it’s night and day when it comes to rigor and experimental design.

These kinds of write-ups are getting increasingly desperate. It would be amusing if it weren’t so sad.

Unfortunately, 2020 brought to light the limitations of the peer-review process and its need to raise its standards. This is why I always try to diversify my portfolio when it comes to publishing my own papers, because I want to be sure my papers are reviewed fairly with a minimum of complacency. The last thing I want is to see my studies being scrutinized on PubPeer and ripped apart like a carcass in a shark frenzy.
